Building a GPT-3 Personal Assistant

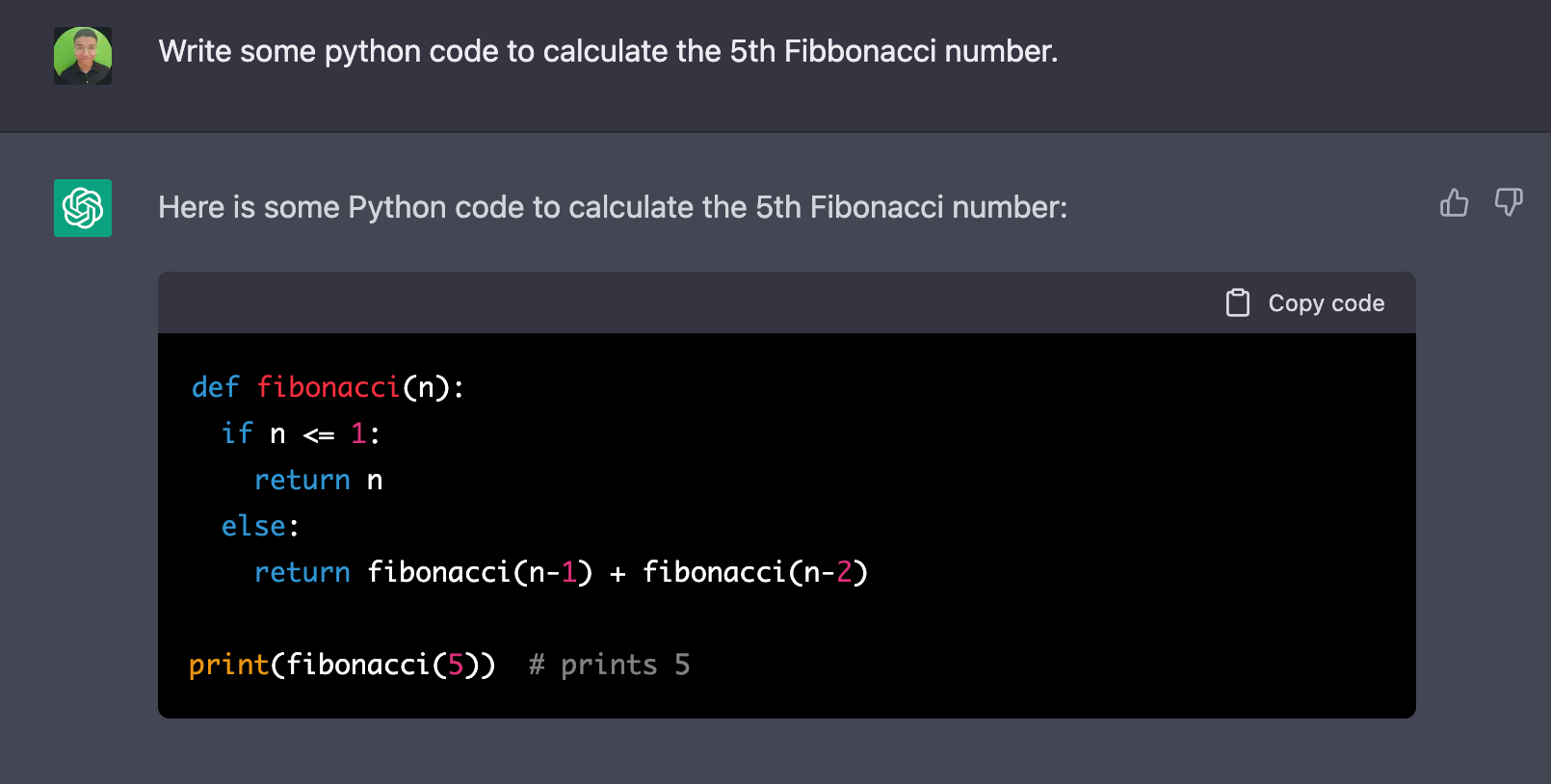

OpenAI has a publicly available API for their GPT-3 model, "text-davinci-003". This API provides a simple and clean interface for text completions. See the example below for a demonstration of just how easy it is.

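    import os

    import openai

    # Read the API key from the environment rather than hard-coding it.
    openai.api_key = os.getenv("OPENAI_API_KEY")

    # Request a short, deterministic completion (temperature=0).
    response = openai.Completion.create(
        model="text-davinci-003",
        prompt="Say this is a test",
        max_tokens=7,
        temperature=0,
    )
    print(response["choices"][0]["text"])

One thing these LLMs (large language models) are good at is writing code.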

If there is an example online, these LLMs can probably dredge it up. But the appropriate solution for a given problem may not appear in the training data at all; it may instead be a composition of solutions to several sub-problems.

Problem Statement

So far, no commercial LLM offers the ability to browse the internet or even to run the code that it writes. I want to change that: I want to see just how powerful these models can be when given the ability to execute Python code.

The Solution

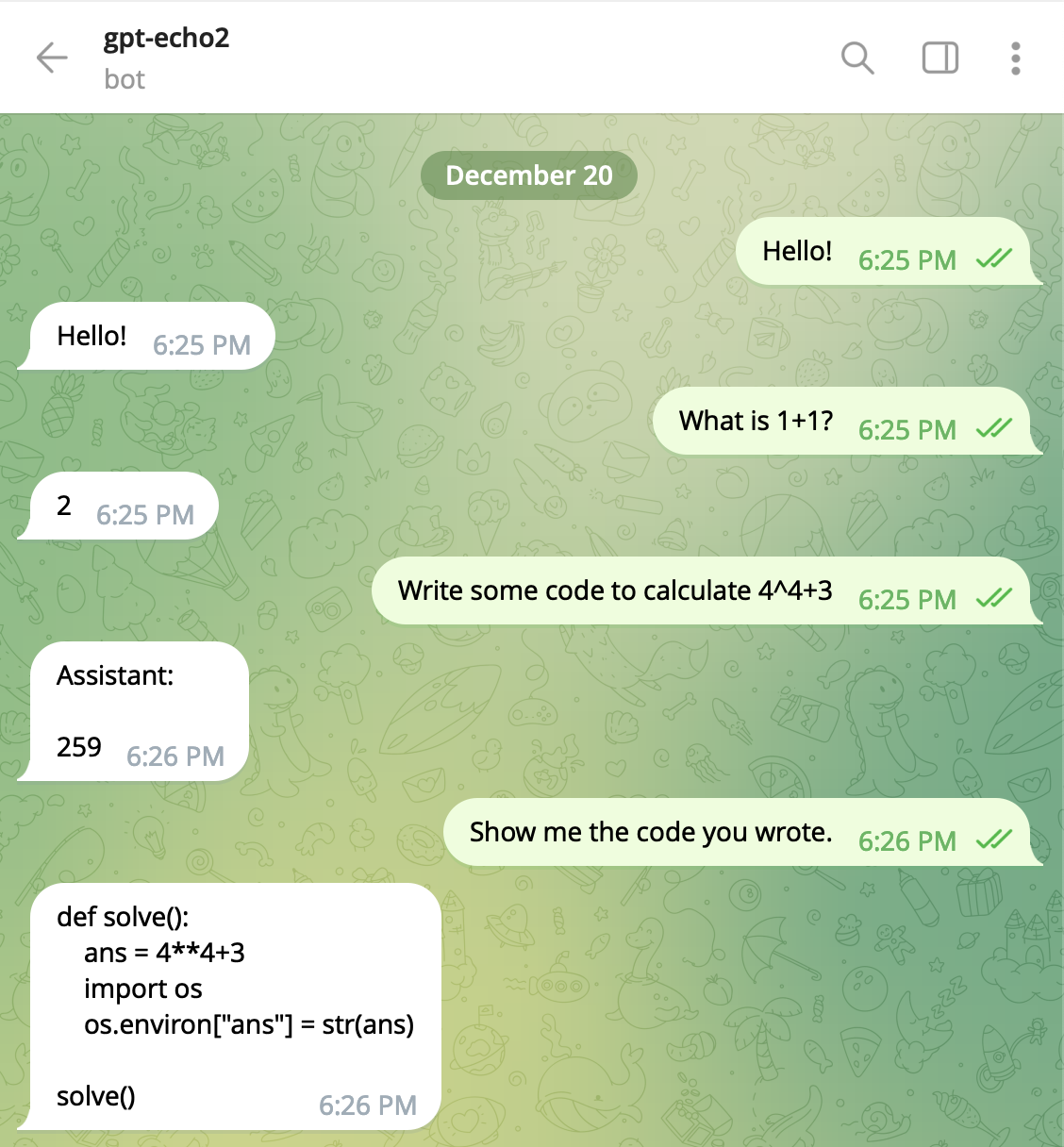

I built a GPT "client" that grants GPT-3 the ability to execute arbitrary Python code: the model signals that it wants code run by starting its message with a special directive. The results are astounding.

Take note of how I have GPT load its results into an env variable. I compel GPT to always implement a function `solve` and write its result into an env variable `ans` to prevent any accidental namespace clobbering.
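A minimal sketch of how such a client might handle a response is shown below. The directive string `#!python`, the helper name `run_if_code`, and the exact contract (a zero-argument `solve` whose result ends up in `ans`) are assumptions for illustration; the post does not show the real client's protocol. Calling `exec` on model output is unsafe outside a sandbox.

```python
from typing import Optional

# Hypothetical directive; the real client's marker is not shown in this post.
DIRECTIVE = "#!python"


def run_if_code(message: str) -> Optional[dict]:
    """Execute a model reply if it starts with the directive.

    Returns the namespace the code ran in (including ``ans``), or None
    when the reply is plain text that should go straight to the user.
    """
    stripped = message.lstrip()
    if not stripped.startswith(DIRECTIVE):
        return None

    code = stripped[len(DIRECTIVE):]
    # A fresh dict per call keeps generated code from clobbering the
    # client's own globals.
    namespace: dict = {}
    exec(code, namespace)  # unsafe for untrusted code; sandbox in practice

    # Assumed contract: the reply defines solve(); fill in ans from it
    # if the code did not already assign ans itself.
    if "ans" not in namespace and callable(namespace.get("solve")):
        namespace["ans"] = namespace["solve"]()
    return namespace


reply = """#!python
def solve():
    return sum(n * n for n in range(1, 11))

ans = solve()
"""
result = run_if_code(reply)
print(result["ans"])  # -> 385
```

Running each reply in its own dictionary is what keeps `solve` and `ans` from colliding with anything the client itself defines.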

The asks can get pretty complex. For example:

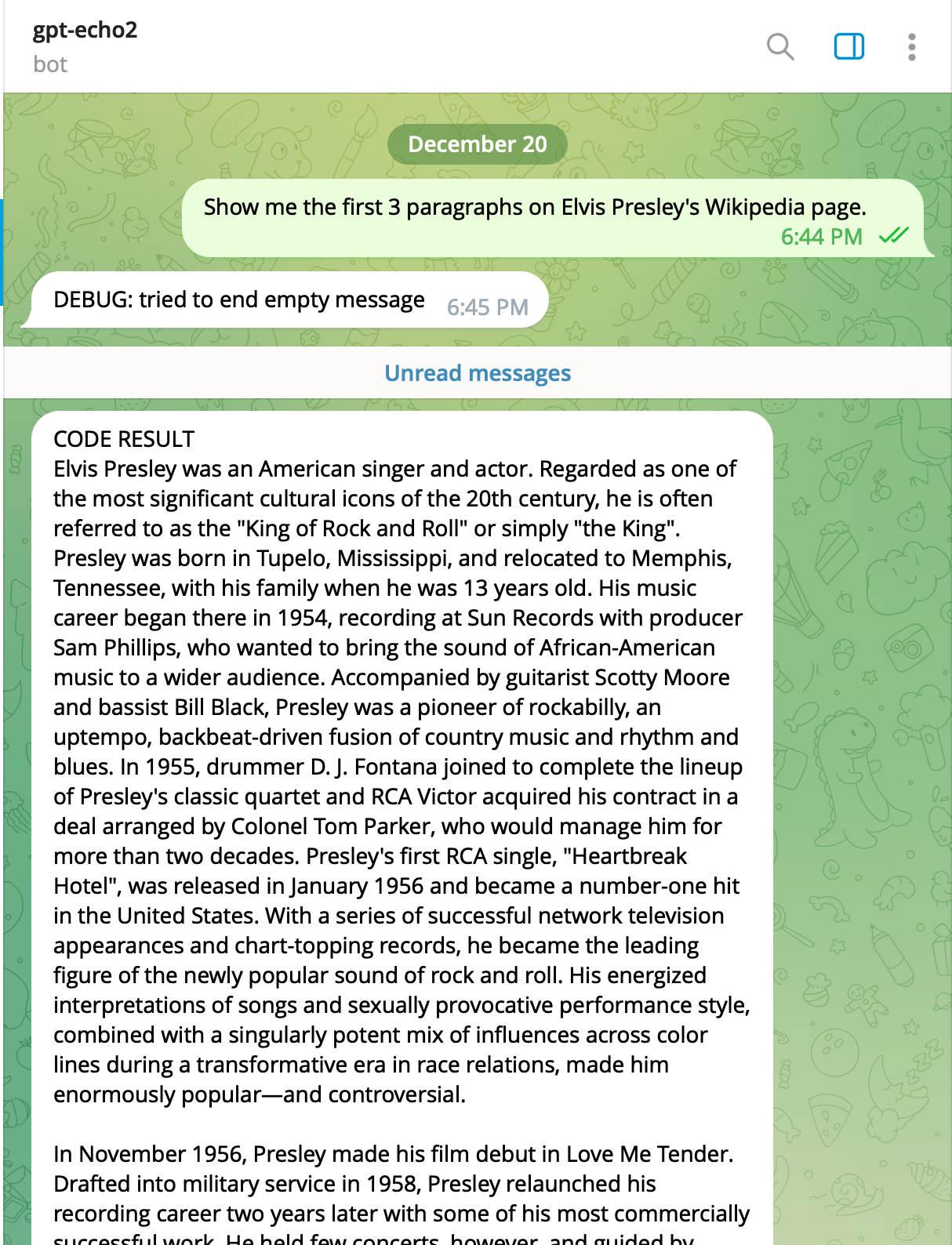

GPT-3 successfully wrote code, inspected the result and returned it to the human user (me!). One issue with this implementation, though, is that it is expensive: GPT-3 receives the result as text in a prompt and echoes it back, so the same text is billed as both prompt and completion tokens. I plan on resolving this by expanding the ways GPT can pass data back, since right now it has to read whatever was loaded into `ans` itself.
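One possible shape for that change, sketched under assumptions: the generated code sets a hypothetical `return_directly` flag when its result can be shown to the user as-is, and the client then skips the second model call. The flag, the `deliver` helper, and the `fake_model` stand-in are all illustrative names, not part of the actual client.

```python
from typing import Callable


def deliver(namespace: dict, ask_model: Callable[[str], str]) -> str:
    """Return the text to show the user for an executed reply.

    ``namespace`` is the dict the generated code ran in. ``ask_model`` is
    any function that sends a prompt to the LLM and returns its completion.
    """
    ans = namespace.get("ans")
    # Hypothetical opt-in flag set by the generated code itself.
    if namespace.get("return_directly", False):
        return str(ans)  # no second model call, no echoed tokens

    # Behaviour as described above: the result goes back into a prompt
    # and the model restates it for the user.
    return ask_model(f"The code produced this result:\n{ans}\nReport it to the user.")


calls = []


def fake_model(prompt: str) -> str:
    # Stand-in for a real API call so the sketch runs offline.
    calls.append(prompt)
    return "The answer is 385."


print(deliver({"ans": 385, "return_directly": True}, fake_model))  # -> 385
print(deliver({"ans": 385}, fake_model))  # -> The answer is 385.
print(len(calls))  # -> 1
```

Only the second call reaches the model, so only that path pays for the result twice.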

Future Work

- Reduce few-shot prompt complexity. My prompts are too verbose, and that is literally costing me money.

- Add a "routing" layer of abstraction. Allow an instance of GPT to select among a few pre-trained LLMs that might be better suited to the task at hand.

- Improve general reliability. GPT doesn't always respond with something functional. I need to improve the feedback loop for both GPT and the human so that errors surface more obviously and are simpler to resolve.
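The reliability item could start with a loop like the one below: run the generated code, and if it raises, print the traceback for the human and hand the same text back for a rewrite. This is a sketch under assumptions, not the client's current behaviour; `run_with_feedback`, `fix_code`, and the retry limit are hypothetical names and values, and `fake_fix` stands in for a real model call.

```python
import traceback
from typing import Callable, Tuple


def run_with_feedback(
    code: str,
    fix_code: Callable[[str, str], str],
    max_attempts: int = 3,
) -> Tuple[object, int]:
    """Run generated code, handing any traceback back for a rewrite.

    ``fix_code(code, error_text)`` stands in for a call to the model that
    returns corrected code. Returns ``(ans, attempts_used)``.
    """
    for attempt in range(1, max_attempts + 1):
        namespace: dict = {}
        try:
            exec(code, namespace)  # unsafe for untrusted code; sandbox in practice
            return namespace["solve"](), attempt
        except Exception:
            error_text = traceback.format_exc()
            # Surface the failure to the human as well as to the model.
            print(f"attempt {attempt} failed:\n{error_text}")
            if attempt < max_attempts:
                code = fix_code(code, error_text)
    raise RuntimeError(f"no working code after {max_attempts} attempts")


broken = "def solve():\n    return 1 / 0\n"


def fake_fix(code: str, error_text: str) -> str:
    # Stand-in for the model: a real client would put both arguments in a prompt.
    return "def solve():\n    return 42\n"


ans, attempts = run_with_feedback(broken, fake_fix)
print(ans, attempts)
```

Here the first attempt fails with a `ZeroDivisionError`, the stand-in returns working code, and the second attempt succeeds.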